

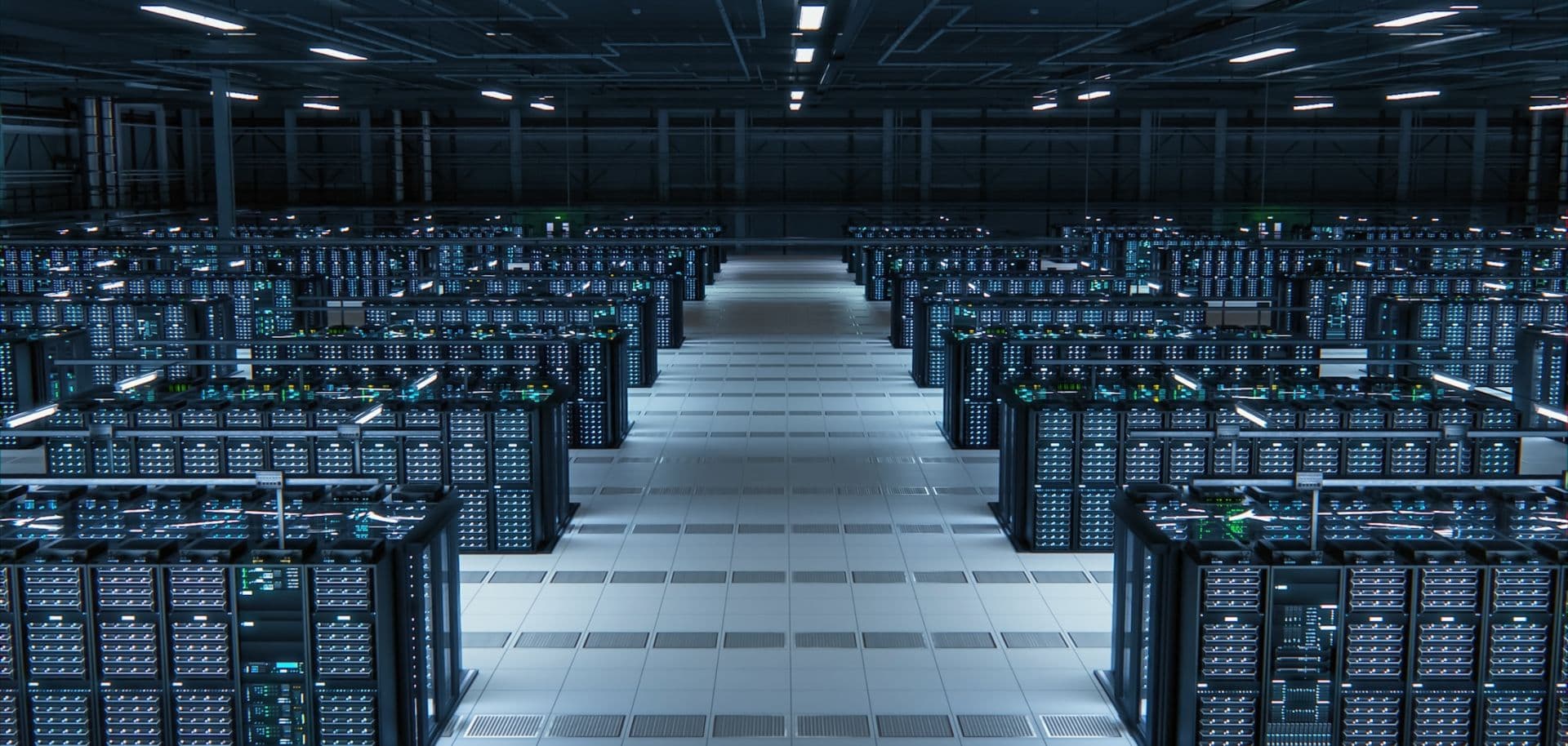

Global tech leaders are racing to build massive “AI factories” – sprawling data centers packed with GPUs and powered by gigawatts of electricity – to train the next generation of artificial intelligence. These new facilities dwarf previous computing installations. For example, Elon Musk’s xAI expanded its Colossus cluster from 100,000 to 200,000 Nvidia GPUs in just a few months, making it “the largest AI training platform in the world.” Single sites of this size now invite comparison with national totals: China’s government reported 246 exaflops of AI computing capacity as of mid‑2024. Today’s top AI data centers often cover hundreds of acres and draw hundreds of megawatts (in some cases gigawatts) of power, backed by cutting-edge cooling and networking. Below we profile the world’s biggest AI-focused data centers, built or planned, by power, GPU count, cooling and connectivity.

OpenAI’s Stargate Initiative: In the U.S., OpenAI’s Stargate program (with Oracle and SoftBank) is a defining project. As of late 2025 it spans nearly 7 gigawatts (GW) of planned data center capacity, already about 70% of its 10 GW goal, backed by more than $400 billion in planned investment. Wired notes that 7 GW is roughly “the same amount of power as seven large-scale nuclear reactors.” These sites are designed for extreme density: industry observers expect 100–150 kW per rack, implying tens of thousands of GPU servers per campus. OpenAI says the five new U.S. sites (in Texas, New Mexico, Ohio and the Midwest) will create about 25,000 on‑site jobs. Nvidia is pledging huge GPU supplies (one briefing cited more than 400,000 GPUs at Abilene, Texas, alone), which, together with Oracle’s capacity, could eventually push the program past its 10 GW target.
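To make the density figures concrete, here is a back-of-envelope sketch that converts a power budget into rack and GPU counts. The 1 GW campus size, the 72-GPUs-per-rack figure (borrowed from NVL72-style racks; not stated for Stargate) and the helper name `estimate_racks_and_gpus` are illustrative assumptions. The sketch ignores cooling and other facility overhead, so real counts would be lower.

```python
def estimate_racks_and_gpus(it_power_mw: float, kw_per_rack: float, gpus_per_rack: int) -> tuple[int, int]:
    """Rough count of racks (and GPUs) a given power budget can feed.

    Assumes every kilowatt goes to racks at a uniform density and ignores
    facility overhead (cooling, power conversion), which would lower the count.
    """
    racks = int(it_power_mw * 1000 // kw_per_rack)  # MW -> kW, whole racks only
    return racks, racks * gpus_per_rack


# Hypothetical 1 GW campus at both ends of the 100-150 kW/rack range,
# assuming 72 GPUs per rack (an illustrative NVL72-style figure).
for density_kw in (100, 150):
    racks, gpus = estimate_racks_and_gpus(1000, density_kw, 72)
    print(f"{density_kw} kW/rack -> {racks:,} racks, {gpus:,} GPUs")
```

Even after deducting overhead, a gigawatt at these densities lands in the hundreds of thousands of GPUs, which is why multi-gigawatt programs translate into such large hardware orders.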

xAI’s Colossus (Memphis): Elon Musk’s xAI built Colossus as an “in-house compute cluster” in Memphis, Tennessee. Colossus 1 (and now Colossus 2) is growing at remarkable speed: it reached 100,000 GPUs in 122 days and then doubled to 200,000 in just 92 more days. It already draws about 200 megawatts (MW) of power, and xAI plans to exceed 1 GW of capacity by early 2026, a faster build-out than any rival’s. CRE Daily reports Colossus 2 will host over 1,000 MW at full build, far ahead of Google or Anthropic clusters. (By comparison, the original Colossus 1 was expected to need about 150 MW when complete.) Powering it has drawn scrutiny: xAI added dozens of local gas turbines (and even bought a Duke Energy plant) to supply more than 200 MW of extra capacity.
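A quick calculation shows how the Colossus build accelerated. This sketch reads the figures as 100,000 GPUs installed in the first 122 days and a further 100,000 (for 200,000 in total) over the following 92 days, consistent with the 100,000-to-200,000 growth described in the introduction. `gpus_per_day` is a hypothetical helper, and the result is only an average that hides week-to-week variation.

```python
def gpus_per_day(gpus_added: int, days: int) -> float:
    """Average deployment rate over one build phase."""
    return gpus_added / days


# Assumed phases: 0 -> 100,000 GPUs in 122 days, then 100,000 -> 200,000 in 92 days.
phase1 = gpus_per_day(100_000, 122)
phase2 = gpus_per_day(100_000, 92)
print(f"phase 1: {phase1:,.0f} GPUs/day; phase 2: {phase2:,.0f} GPUs/day")
```

The second phase works out to roughly a third more GPUs per day than the first, which is the sense in which the build-out is accelerating rather than merely large.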

Microsoft’s Fairwater (Wisconsin): Microsoft is investing more than $7 billion in a new AI campus called Fairwater, opening in 2026. By Microsoft’s account it is “the world’s most powerful AI datacenter.” Fairwater covers 315 acres, with three buildings totaling about 1.2 million sq ft. It will house hundreds of thousands of the latest Nvidia GPUs in a single flat network, linked by so much fiber that it “could wrap the planet four times,” and is expected to deliver 10× the performance of today’s fastest supercomputer.

Aerial view of Microsoft’s new Fairwater AI datacenter in Wisconsin. The 315‑acre facility will contain three buildings totaling about 1.2 million sq ft and “hundreds of thousands” of GPUs connected into one supercomputer.

Unlike conventional cloud datacenters, Fairwater behaves as a single giant machine: all racks are interconnected at low latency. Microsoft’s engineers say each rack will hold 72 Nvidia Blackwell GPUs in one NVLink domain, with 1.8 TB/s of GPU-to-GPU bandwidth and 14 TB of pooled memory. In effect, every rack is a giant accelerator capable of about 865,000 tokens per second of throughput. To cool this density, over 90% of Fairwater’s capacity uses closed-loop liquid cooling: chilled water is pumped through the racks and recirculated with no water loss. The heated water is sent to two arrays of 20‑ft fans (172 fans in total) to be cooled and recirculated. This system, the second-largest water-cooled chiller plant on Earth, cools the facility with minimal water: about what a restaurant uses in a year, despite the center’s scale.

Interior of a high-density GPU cluster in Microsoft’s Wisconsin datacenter. Each rack packs 72 Nvidia GPUs linked by NVLink, with 14 TB of pooled memory, and must be liquid-cooled.
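Microsoft’s per-rack figures (72 GPUs, about 865,000 tokens per second) can be scaled up naively to the campus level. This sketch assumes a hypothetical 200,000-GPU campus as a stand-in for “hundreds of thousands” and simply multiplies rack count by the per-rack rate. Treat the result as an upper bound: the workload behind the 865,000 figure (model, precision, batch size) isn’t specified here, and one large training job does not add up rack by rack like this.

```python
GPUS_PER_RACK = 72                # per Microsoft's description of Fairwater racks
TOKENS_PER_SEC_PER_RACK = 865_000  # quoted per-rack throughput


def naive_campus_throughput(total_gpus: int) -> tuple[int, int]:
    """Whole racks and summed tokens/sec if every rack ran at the quoted rate.

    Hypothetical upper bound: ignores partial racks and workload differences.
    """
    racks = total_gpus // GPUS_PER_RACK
    return racks, racks * TOKENS_PER_SEC_PER_RACK


# 200,000 GPUs is an assumed figure, not a reported one.
racks, tokens_per_sec = naive_campus_throughput(200_000)
print(f"{racks:,} racks, ~{tokens_per_sec / 1e9:.1f}B tokens/sec (upper bound)")
print(f"per GPU: ~{TOKENS_PER_SEC_PER_RACK / GPUS_PER_RACK:,.0f} tokens/sec")
```

The per-GPU figure (roughly 12,000 tokens per second) is the more portable number, since it does not depend on the assumed campus size.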

Amazon’s Project Rainier: Amazon Web Services is building Project Rainier, a multi-data-center UltraCluster for Anthropic’s AI. It will “connect hundreds of thousands of Trainium2 chips across the U.S.” AWS says Rainier will be the “world’s most powerful computer” for training, with about 5× the compute of Anthropic’s current largest cluster. The design uses new UltraServers (four Trainium2 servers with 64 chips in total) linked by the proprietary NeuronLink interconnect, with EFA networking across sites. Rainier is spread across multiple AWS data centers rather than a single campus, but its scale rivals any single AI center.
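The UltraServer layout implies a simple unit count. The sketch below assumes a hypothetical total of 400,000 Trainium2 chips as a stand-in for “hundreds of thousands” and uses the 64-chips-per-UltraServer figure from the text; `ultraservers_needed` is an illustrative helper, not an AWS API.

```python
CHIPS_PER_SERVER = 16         # 64 chips across 4 Trainium2 servers, per the text
SERVERS_PER_ULTRASERVER = 4


def ultraservers_needed(total_chips: int) -> int:
    """Whole UltraServers needed to host total_chips (rounds up)."""
    chips_per_ultraserver = CHIPS_PER_SERVER * SERVERS_PER_ULTRASERVER  # 64
    return -(-total_chips // chips_per_ultraserver)  # ceiling division on ints


# 400,000 chips is an assumed figure for illustration only.
print(ultraservers_needed(400_000))
```

Since the 64 chips inside an UltraServer are joined by NeuronLink, this count roughly approximates how many NeuronLink islands the EFA network has to stitch together across sites.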

Google’s India AI Hub: In Asia, Google announced a $15 billion AI data center project in Visakhapatnam, India. The campus will be Google Cloud’s largest AI hub outside the U.S., with an initial capacity of 1 GW. (State and company officials suggest it could generate about 188,000 jobs.) This reflects Google’s bet on rising demand for generative AI in India. (Microsoft and Amazon have also been investing heavily in new Indian data centers for cloud AI services.)

AI-driven data center expansion is truly global.

UAE’s Stargate (Abu Dhabi): Abu Dhabi’s G42 (with SoftBank, Nvidia, OpenAI, Cisco and Oracle) is planning a 5 GW “Stargate” AI campus in the UAE. G42 says the first 200 MW should be online in 2026, as the initial phase of about 1 GW, and it is in talks with foreign partners to build out the remainder. Like the U.S. Stargate sites, the campus is designed around liquid cooling, with power from a mix of nuclear, solar and gas generation. (Final permits are pending, and U.S. export-control review is also a factor.)

China: China is accelerating its own AI data center build-out. For example, Shanghai plans five new large-scale AI data centers by 2025 to add over 100 exaflops of computing. (China’s total AI computing capacity was 246 exaflops in mid-2024, second only to the U.S.) Nationwide, local governments and state firms launched dozens of AI and cloud data center projects in 2024–25. The aim is to keep pace with domestic AI firms (such as Alibaba, Baidu and Huawei) and to support national initiatives (e.g. the Lingjie and Peng Cheng supercomputers).

Europe: In Europe, one of the largest planned AI centers is in the UK. QTS (Blackstone) broke ground on a £10 billion campus in Northumberland, a 540,000 m² site touted as “Europe’s largest AI data centre.” It will deploy closed-loop liquid cooling throughout to recirculate water and handle waste heat efficiently. (The project was approved in 2025 after years of planning.) Other major data center projects are advancing across Europe: for example, Microsoft’s Zurich-West site includes AI-optimized halls, and Blackstone’s project in Aragon, Spain, will eventually offer 300 MW of capacity. Google is also expanding in Europe (a €1 billion AI-focused data center in Belgium was announced in 2025). All these projects will tie into grids with a growing share of renewables.

What powers and cools these behemoths? New AI centers require massive energy and cooling infrastructure. For power, planners combine grid upgrades, renewables and on-site generation. In Texas (ERCOT), for example, projects are arranging private-wire connections to gas plants and battery farms to add tens of GW quickly. In Ohio and elsewhere in the PJM market, grid upgrades and substation expansions are needed. Many campuses will rely on a mix of sources: renewable power purchase agreements (PPAs), utility hookups, and on-site gas turbines (often hydrogen-capable), with diesel for backup. U.S. Stargate partners are using wind and solar PPAs and battery storage, while SoftBank’s SB Energy group will supply and manage energy for its sites. Longer term, small modular nuclear reactors (SMRs) and large wind and solar arrays are being considered to meet multiple gigawatts of demand. Notably, G42’s UAE AI campus explicitly plans to tap nuclear and solar generation alongside gas.
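Converting power into annual energy shows why planners are lining up dedicated generation rather than relying on spare grid capacity. This sketch assumes a constant average load; the 80% utilization for a 10 GW fleet is a hypothetical value chosen for illustration, and `annual_energy_twh` is an illustrative helper.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def annual_energy_twh(load_gw: float, utilization: float = 1.0) -> float:
    """Energy drawn over one year by a load of load_gw at the given average utilization."""
    gwh = load_gw * utilization * HOURS_PER_YEAR
    return gwh / 1000  # GWh -> TWh


print(annual_energy_twh(1.0))        # one gigawatt running flat out
print(annual_energy_twh(10.0, 0.8))  # hypothetical 10 GW fleet at 80% average load
```

A single gigawatt running continuously consumes about 8.76 TWh a year, so a program at the 10 GW scale sits in the tens of terawatt-hours annually even at partial utilization.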

Cooling is equally extreme. At 100+ kW per rack, air cooling alone won’t cut it. These centers rely on advanced liquid cooling: direct-to-chip cooling and rear-door heat exchangers are standard, and some may even use immersion cooling for the hottest clusters. For example, Microsoft’s Fairwater pumps coolant through each rack and recirculates it with giant chillers, while Google and Facebook sites similarly rely on chilled-liquid systems. Facility-wide water systems are sized for multi-gigawatt loads, with economizers and heat-recovery loops used where possible. The result is that, paradoxically, these sprawling data centers may use less water per MW than older sites: Fairwater’s closed-loop design, which covers over 90% of its capacity, uses roughly as much water per year as a single restaurant.
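The heat-balance relation Q = m_dot · c_p · ΔT explains why air runs out of headroom at these densities. The sketch below assumes a hypothetical 120 kW rack (inside the “100+ kW” range above) and a 10 K coolant temperature rise, with approximate textbook property values for water and air. It ignores pump, fan and duct limits, so treat it as an order-of-magnitude comparison.

```python
# Approximate textbook property values near room temperature.
WATER_CP = 4.186        # kJ/(kg*K)
WATER_DENSITY = 1000.0  # kg/m^3
AIR_CP = 1.005          # kJ/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3, roughly 20 C at sea level


def volumetric_flow_m3_s(heat_kw: float, delta_t_k: float, cp: float, density: float) -> float:
    """Coolant volume flow needed to carry heat_kw away with a delta_t_k rise.

    Rearranges Q = m_dot * c_p * dT to m_dot = Q / (c_p * dT) in kg/s,
    then divides by density to get m^3/s.
    """
    mass_flow_kg_s = heat_kw / (cp * delta_t_k)
    return mass_flow_kg_s / density


RACK_KW = 120.0  # hypothetical rack power
DELTA_T = 10.0   # hypothetical coolant temperature rise, kelvin

water = volumetric_flow_m3_s(RACK_KW, DELTA_T, WATER_CP, WATER_DENSITY)
air = volumetric_flow_m3_s(RACK_KW, DELTA_T, AIR_CP, AIR_DENSITY)
print(f"water: {water * 60_000:.0f} L/min")  # m^3/s -> litres per minute
print(f"air:   {air:.2f} m^3/s")
print(f"air needs ~{air / water:,.0f}x the volume of water")
```

Under these assumptions water needs roughly 172 litres per minute per rack, while air would need nearly 10 cubic metres per second through a single rack, a volume gap of about 3,500 times.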

Networking and data movement are also pushed to the limit. Tens of thousands of GPUs are linked by ultra‑high‑speed fabrics. Microsoft reports that Fairwater’s pods use InfiniBand and Ethernet fabrics running at 800 Gbps in a full fat-tree topology spanning thousands of GPUs. To scale further, companies are also building “AI WANs” to link multiple data centers at low latency. Within a rack or server group, GPU‑to‑GPU traffic runs over NVLink/NVSwitch (in Nvidia-based systems) or NeuronLink (in Amazon’s UltraServers). All told, the design goal is a single giant supercomputer spanning a campus (or even multiple sites) with minimal latency.
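A “full” fat-tree offers full bisection bandwidth, and its maximum size is set by the switch port count (radix). This sketch uses the textbook three-tier k-ary fat-tree built from identical k-port switches; the text does not say which radix or how many tiers Fairwater uses, so the radix values below are hypothetical.

```python
def _check_radix(k: int) -> None:
    if k <= 0 or k % 2:
        raise ValueError("switch radix k must be a positive even number")


def fat_tree_hosts(k: int) -> int:
    """Endpoints in a three-tier k-ary fat-tree of identical k-port switches.

    k pods, each with k/2 edge switches serving k/2 hosts apiece:
    k * (k/2) * (k/2) = k**3 / 4.
    """
    _check_radix(k)
    return k ** 3 // 4


def fat_tree_switches(k: int) -> int:
    """Switch count: k pods x (k/2 edge + k/2 aggregation) plus (k/2)**2 core switches."""
    _check_radix(k)
    return k * k + (k // 2) ** 2


# Hypothetical radices; Fairwater's actual switch hardware isn't specified.
for radix in (32, 64):
    print(f"k={radix}: {fat_tree_hosts(radix):,} hosts, {fat_tree_switches(radix):,} switches")
```

With 64-port switches a three-tier fat-tree tops out at 65,536 endpoints, comfortably covering the “thousands of GPUs” per fabric described above; growing beyond that means more tiers, higher-radix switches, or an inter-site layer such as the AI WANs mentioned.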

In sum, these emerging data centers are in many ways the ultimate cyber-infrastructure: buildings as large as factories, with electrical and cooling systems to match, and exaflops of compute inside. They exist to fulfill the promise of AI, from modeling climate change to designing medicines, by “giving AI the compute to power it,” as OpenAI’s Sam Altman puts it. Their completion marks a transformational step: a new era in which human ingenuity in data center engineering fuels breakthrough AI discovery.
