We're loading the full news article for you. This includes the article content, images, author information, and related articles.

In the midst of the current artificial‑intelligence boom, researchers and investors often rely on benchmark scores as proxies for progress.In the midst of the current artificial‑intelligence boom, researchers and investors often rely on benchmark scores as proxies for progress.

In the midst of the current artificial‑intelligence boom, researchers and investors often rely on benchmark scores as proxies for progress. Yet many widely used tests measure little more than pattern recognition or rote memorization. To chart a path toward artificial general intelligence (AGI) — systems that can adapt to novel tasks with minimal guidance — we need metrics that reflect fluid intelligence, not just accumulated knowledge. This need inspired the Abstraction and Reasoning Corpus (ARC) and its successor benchmark, ARC‑AGI. Born from François Chollet’s 2019 paper On the Measure of Intelligence, ARC‑AGI has become a focal point of the open AGI movement, attracting competitions, research funding and debate . This article examines the company behind ARC‑AGI, why the benchmark was created, how it works, how it has influenced the AI boom, and where the journey to AGI goes next.

The ARC‑AGI benchmark is maintained by the ARC Prize Foundation, a nonprofit organization formed in 2025. Its mission is to accelerate the development of artificial general intelligence by creating human‑calibrated benchmarks that measure a system’s ability to adapt to problems that are trivial for people but challenging for machines. The foundation views its benchmarks not simply as tests but as driving forces that incentivize researchers to explore new approaches beyond pattern matching . By keeping the organization independent and open‑source, the founders aim to align incentives with public good rather than corporate agendas .

ARC Prize Foundation is led by François Chollet, creator of the Keras deep‑learning library and a long‑time advocate for measuring intelligence rather than skill. Chollet co‑founded the foundation with Mike Knoop, a technology entrepreneur, and Greg Kamradt, who serves as president . Chollet originally proposed the ARC benchmark in his 2019 paper, arguing that standard AI evaluations focused on skill at specific tasks and ignored the role of prior knowledge and experience . He posited that intelligence should be defined as skill‑acquisition efficiency — how quickly a system converts experience and minimal priors into new capabilities . To test this definition, he introduced ARC, a collection of grid‑based puzzles designed to mimic innate human reasoning .

Traditional AI benchmarks, from board games to multiple‑choice exams, often reward systems that accumulate massive amounts of data and compute. Chollet argued that such tests conflate skill with intelligence: unlimited priors allow developers to “buy” arbitrary skill levels for a model, masking its intrinsic generalization power . To push the field toward AGI, he proposed evaluating how efficiently a learner acquires new skills under limited priors and experience . The ARC benchmark was built to embody this philosophy.

The ARC Prize Foundation aims to quantify the capability gap between human and artificial intelligence. Its mission statement notes that the organisation creates and curates benchmarks to “identify, measure, and ultimately close the capability gap between human and artificial intelligence on tasks that are simple for people yet remain difficult for even the most advanced AI systems today” . By focusing on tasks that ordinary people can solve effortlessly, the benchmark provides a clear signal of how far AI still has to go.

ARC‑AGI quickly evolved into a series of competitions. The inaugural 2020 Kaggle contest saw the winning team reach only 21 % accuracy on the evaluation set —clear evidence of the benchmark’s difficulty. Subsequent “ARCathon” competitions in 2022 and 2023 attracted hundreds of teams from dozens of countries and gradually improved scores to 30 % . In 2024, the ARC Prize expanded into a broader contest with over $125K in prizes and a top private‑set score of 53 % . By 2025 the ARC Prize Foundation had become a full nonprofit with a $725K prize pool, underscoring the benchmark’s growing influence .

ARC‑AGI assesses fluid intelligence by restricting tasks to cognitive building blocks that all humans acquire early in life. The design deliberately avoids cultural artifacts like language or domain‑specific knowledge . Instead, tasks rely on core knowledge priors such as object permanence, numerosity and symmetry. This ensures a fair comparison between humans and AI: success reflects genuine reasoning rather than training on vast text corpora .

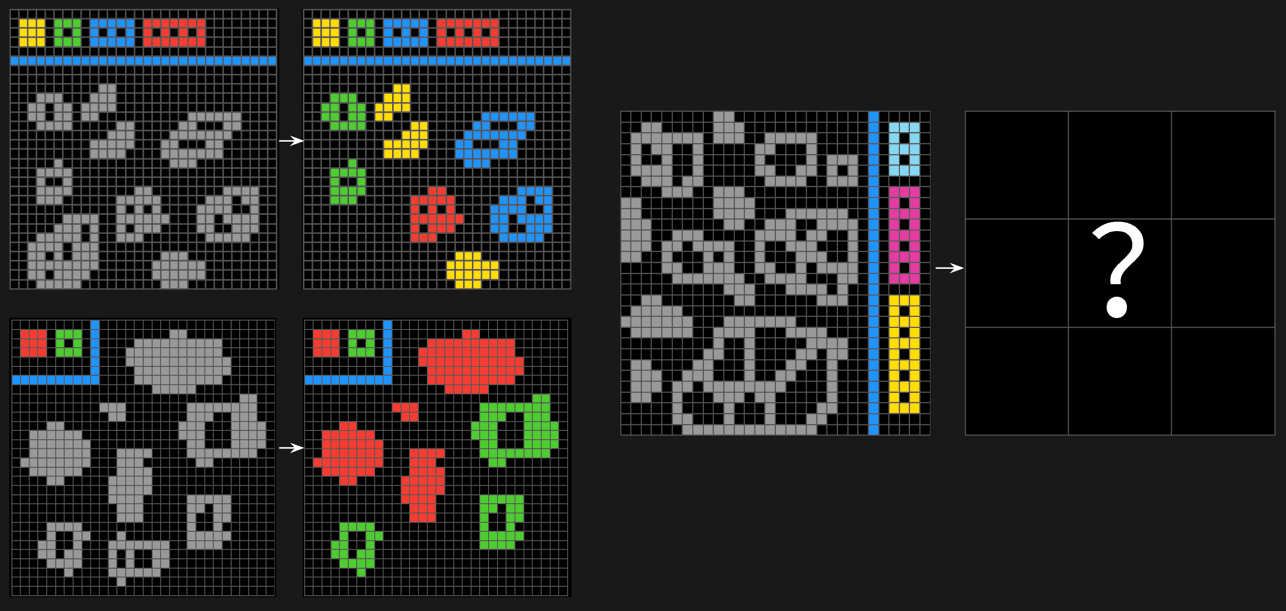

The benchmark follows the principle “Easy for humans, hard for AI.” Each ARC task consists of a handful of input–output examples represented as colored grids. The challenge is to infer the underlying transformation and apply it to a new test grid. Humans can intuitively recognize patterns like reflection, counting or line extension; current AI models struggle to generalize from few examples . Because tasks in the evaluation set are kept secret and unique, developers cannot hard‑code solutions . This enforces developer‑aware generalization, meaning models must truly learn rather than memorize.

ARC‑AGI tasks are essentially program‑synthesis problems. The ideal solver would infer a small program in a domain‑specific language that transforms the inputs into the outputs . Performance is measured as the fraction of tasks correctly solved; a human‑level solver is expected to achieve around 60 % accuracy . Later versions introduce an efficiency component: ARC‑AGI‑2 requires models to solve tasks for less than $0.42 per task, reflecting the energy and computational constraints of human cognition .

Since 2019, ARC‑AGI has served both as a north star and a mirror for AI research. On one hand, it catalyzes novel approaches like program synthesis, test‑time adaptation and chain‑of‑thought prompting. On the other, it exposes limitations in current models. Early deep‑learning models achieved single‑digit scores; however, specialized reasoning systems have recently pushed performance dramatically upward. OpenAI’s o3 model scored 87.5 % on ARC‑AGI‑1 in a high‑compute setting , tripling its predecessor’s performance and highlighting a step‑change in reasoning capabilities. Yet even this success came with caveats: the benchmark’s creator warns that newer ARC‑AGI‑2 tasks, which are more diverse and efficiency‑constrained, could reduce o3’s accuracy to below 30 % .

The AI community has responded enthusiastically to ARC‑AGI competitions. Competitors have built domain‑specific languages, search algorithms and self‑reflection loops to synthesize candidate programs. In 2024 OpenAI’s o3 preview produced a step‑function increase in ARC scores, while 2025 results from the startup Poetiq reportedly matched or exceeded human averages on some private sets . Poetiq combined large language models with iterative loops that generate, evaluate and refine proposed solutions . These advances suggest that test‑time adaptation—adjusting the model’s reasoning process on the fly—is becoming a dominant strategy . The benchmark thus pushes research toward models that can synthesize programs dynamically rather than simply generating text.

As more teams optimize specifically for ARC‑AGI, the benchmark’s public datasets have shown signs of saturation. Reports note that some models achieve high scores on public tasks but drop substantially on semi‑private sets due to data contamination—when benchmark examples leak into training data . This pattern echoes earlier AI benchmarks that were “overfitted” by large models. Chollet and colleagues emphasize that solving ARC‑AGI‑1 is necessary but not sufficient for AGI ; the ability to create new, hard tasks faster than models can learn them is essential to continue measuring progress.

While ARC‑AGI excels at evaluating abstract pattern recognition, critics argue that its grid puzzles may not reflect the full breadth of human intelligence. Tasks lack language, social reasoning, motor control or the ability to interact with the physical world. As Sri Ambati of H2O.ai notes, benchmarks like MMLU allow straightforward comparisons but fail to capture intelligence in practice . Even ARC‑AGI, with its puzzles, measures reasoning in isolation. Real‑world AI agents must gather information, execute code and adapt across domains .

The limitations of ARC‑AGI have spurred the development of broader benchmarks. GAIA(General‑Artificial‑Intelligence‑Agents) is a collaboration among Meta, HuggingFace, FAIR and the AutoGPT community. Unlike ARC‑AGI, GAIA evaluates models on multi‑step tasks requiring web browsing, code execution and multimodal understanding . Its questions range from simple five‑step problems to challenges involving fifty sequential actions . Initial results show that a flexible agent architecture can reach 75 % accuracy, outperforming large corporate models . GAIA demonstrates that intelligence assessments must evolve beyond static puzzles to capture real‑world problem‑solving.

The ARC Prize Foundation continues to refine its benchmarks. ARC‑AGI‑2 introduces efficiency constraints and a larger, hidden evaluation set, making data contamination more difficult . ARC‑AGI‑3 (currently in preview) explores interactive tasks and may include action efficiency, where models must plan sequences to reach a goal. These iterations aim to keep the benchmark “easy for humans, hard for AI” even as models improve.

ARC‑AGI’s open competitions will remain crucial for democratizing AGI research. By publishing training sets and hosting Kaggle contests, the foundation enables teams worldwide to test novel ideas and share code. The 2025 ARC Prize set a $725K prize for achieving 85 % accuracy on ARC‑AGI‑2 at a cost of $0.42 per task , a challenge that encourages efficiency and resourcefulness. Such incentives attract diverse participants and accelerate innovation.

Ultimately, ARC‑AGI is just one piece of the AGI puzzle. Measuring human‑like intelligence requires assessing not only abstract reasoning but also perception, tool use, social understanding and embodied interaction. The field is moving toward agent‑based benchmarks like GAIA that test models in realistic workflows . Chollet’s own paper acknowledges that ARC is a work in progress and offers suggestions for alternatives, such as teacher–student systems where tasks are generated dynamically . Long‑term success may require open‑ended benchmarks that evolve in tandem with AI capabilities.

ARC‑AGI has reshaped how researchers think about measuring intelligence. By focusing on tasks that are easy for humans but hard for machines, it exposes the gap between narrow AI and general reasoning. The ARC Prize Foundation’s nonprofit model and open competitions foster transparency and collaboration, aligning progress toward AGI with the public interest. Yet the benchmark’s own successes highlight its limitations: as models approach human‑level performance on grid puzzles, new tests like GAIA push the frontier into real‑world problem‑solving. The journey to AGI will likely require a suite of evolving benchmarks, each illuminating a different facet of intelligence. ARC‑AGI stands out as a crucial milestone on this path — a catalyst that challenges developers to build systems that can learn to thinkrather than merely memorize.

|

Year |

Key event |

Evidence |

|---|---|---|

|

2019 |

François Chollet introduces the ARC benchmark in On the Measure of Intelligence and hypothesizes it will be difficult to beat |

ARC Prize timeline notes that ARC‑AGI was introduced in Chollet’s 2019 paper |

|

2020 |

First ARC‑AGI competition on Kaggle; winning team achieves 21 % accuracy |

The ARC timeline reports that the 2020 Kaggle competition winner “ice cuber” achieved a 21 % success rate |

|

2022 |

ARCathon 2022 hosts 118 teams from 47 countries; Michael Hodel wins using a domain‑specific language for ARC |

The timeline notes that ARCathon 2022 attracted teams worldwide and that Michael Hodel developed one of the best ARC‑AGI DSLs |

|

2023 |

ARCathon 2023 sees 265+ teams and a top score of 30 % |

Two teams tied for first place with 30 % accuracy on the private evaluation set |

|

2024 |

ARC Prize 2024 awards $125K; top score reaches 53 % |

The 2024 competition ended with a 53 % top score on the private evaluation set |

|

2025 |

ARC Prize becomes a nonprofit; ARC‑AGI‑2 forms the basis of a $725K competition |

The ARC timeline states that in 2025 the ARC Prize grew into a nonprofit and launched ARC‑AGI‑2 with a large prize pool |

Keep the conversation in one place—threads here stay linked to the story and in the forums.

Sign in to start a discussion

Start a conversation about this story and keep it linked here.

Other hot threads

E-sports and Gaming Community in Kenya

Active 9 months ago

The Role of Technology in Modern Agriculture (AgriTech)

Active 9 months ago

Popular Recreational Activities Across Counties

Active 9 months ago

Investing in Youth Sports Development Programs

Active 9 months ago